Do you find it hard to understand how computers handle language? Large language models (LLMs) use neural networks to generate and classify text. This blog explains how LLMs are changing natural language processing.

Discover their role today.

Key Takeaways

- Powerful Text Creation: GPT-3 has 175 billion parameters. It writes text like humans. It helps make chatbots and virtual assistants better.

- Advanced Training: Training a 12-billion-parameter model used 72,300 A100-GPU-hours in 2023. LLMs use large datasets and tokenization to learn language.

- Many Uses: LLMs help write articles, create software code, and run customer service chatbots. Tools like ChatGPT and GitHub Copilot use these models.

- New Technologies: Transformers and mixture of experts make LLMs work well. Reinforcement learning from human feedback aligns their answers with human preferences.

- Challenges to Face: LLMs can show biases and risk privacy. They need careful handling to keep information safe and treat everyone fairly.

Core Functions of Large Language Models (LLMs)

Large Language Models excel at creating coherent text and categorizing information with high accuracy. They can also answer questions by drawing from vast knowledge bases, enhancing various applications in natural language processing.

Language generation

LLMs excel in text generation by repeatedly predicting the next token (a word or piece of a word). GPT-2, released in 2019 with 1.5 billion parameters, showed strong capabilities but reproduced biases from its training data. GPT-3, introduced in 2020 with 175 billion parameters, improved text quality and coherence.

These generative AI systems use transformer models and deep learning to create human-like text. They power chatbots, content creation, and virtual assistants. Because LLMs learn their predictive abilities from training data, they also inherit its inaccuracies and biases, which show up in their outputs.

GPT-3 can write text that is often indistinguishable from human writing.
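Next-token prediction can be illustrated with a toy example. This is a minimal sketch, not how any particular model is implemented: the candidate words and their scores (logits) are made-up assumptions, whereas a real LLM uses a transformer to compute a score for every token in a vocabulary of tens of thousands of entries. The sketch shows greedy decoding and temperature sampling, two common ways to turn scores into a chosen token.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_token(candidates, logits):
    """Greedy decoding: always pick the highest-scoring candidate."""
    return candidates[max(range(len(logits)), key=lambda i: logits[i])]

def next_token(candidates, logits, temperature=1.0, rng=random):
    """Sample one next token according to softmax(logits / temperature)."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The cat sat on the"
candidates = ["mat", "sofa", "moon"]
logits = [3.0, 2.0, -1.0]

print(greedy_token(candidates, logits))
print(softmax(logits, temperature=0.5))
print(next_token(candidates, logits, temperature=1.0, rng=random.Random(0)))
```

Lower temperatures concentrate probability on the top candidate and make output more predictable; higher temperatures spread it out and make output more varied.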

Text classification

Beyond generating text, language models can sort text into categories. BERT, introduced by Google in 2018, changed how this works: a pretrained model is fine-tuned on labeled examples to handle specific tasks like sentiment analysis or topic sorting.

Text classification helps in spam detection, organizing documents, and understanding customer feedback. By using word embeddings and transformer architecture, models accurately classify large amounts of data.

This process improves natural language processing applications and makes information easier to manage.
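Fine-tuning BERT needs a deep-learning framework and pretrained weights, so the sketch below swaps in a much simpler technique, a multinomial naive Bayes classifier, to show the basic shape of text classification for spam detection: learn word statistics from labeled examples, then assign new text to the most likely category. The training sentences and the `NaiveBayes` class are illustrative assumptions, not part of any LLM.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    return text.lower().split()

class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)        # documents per class
        self.word_counts = defaultdict(Counter)    # word frequencies per class
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            score = math.log(n_docs / total_docs)  # log prior of the class
            n_words = sum(self.word_counts[label].values())
            for w in tokenize(text):
                count = self.word_counts[label][w]
                score += math.log((count + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

train_texts = ["win a free prize now", "claim your free money",
               "meeting moved to monday", "lunch with the team tomorrow"]
train_labels = ["spam", "spam", "ham", "ham"]
clf = NaiveBayes().fit(train_texts, train_labels)
print(clf.predict("free prize money"))
print(clf.predict("team meeting tomorrow"))
```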

Knowledge base answering

Large language models (LLMs) like GPT-4 improve knowledge base answering in natural language processing. Benchmarks such as TruthfulQA and SQuAD measure how accurately models answer questions, and SQuAD's reading-comprehension data is also used for fine-tuning.

GPT-4, launched in 2023, answers factual questions more accurately than its predecessors. With retrieval-augmented generation (RAG), an LLM first retrieves relevant passages from a large document collection or database and then uses them to answer the query.

Businesses use LLMs for customer support, enabling quick and precise responses.
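Retrieval-augmented generation can be sketched in two steps: find the passages most relevant to a question, then place them in the prompt the LLM receives. In this minimal sketch, word-overlap cosine similarity stands in for the learned embeddings real systems typically use, the knowledge-base sentences are invented, and the final call to an LLM is omitted; `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercase word counts with basic punctuation removed (a stand-in for embeddings)."""
    return Counter(text.lower().replace("?", "").replace(".", "").split())

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Insert the retrieved passages into the prompt; a real system would send this to an LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

knowledge_base = [
    "Refunds are issued within 14 days of a return.",
    "Our support line is open Monday to Friday.",
    "Shipping to Canada takes 5 to 7 business days.",
]
print(build_prompt("How long do refunds take?", knowledge_base))
```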

How LLMs are Trained

Training LLMs uses large text datasets. It involves processing text and creating synthetic examples for the model to learn.

Dataset preprocessing

Dataset preprocessing prepares raw data for training large language models. Text from books, websites, and other sources is cleaned, filtered, and formatted. This care pays off because training is expensive: in 2023, training a 12-billion-parameter LLM used 72,300 A100-GPU-hours.

Tokenization then breaks the text into tokens, the units a model learns from. Good preprocessing helps LLMs understand and generate accurate text.
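A few cleaning steps can be sketched in plain Python: strip markup, normalize whitespace, drop fragments that are too short, and remove exact duplicates. This sketch assumes a tiny list of raw documents and a made-up minimum length of five words; production pipelines add many more stages, such as language identification, quality filtering, and near-duplicate detection.

```python
import hashlib
import re

def clean(text):
    """Strip simple HTML tags and collapse runs of whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()

def preprocess(raw_docs, min_words=5):
    """Clean documents, drop very short ones, and remove exact duplicates."""
    seen = set()
    kept = []
    for doc in raw_docs:
        text = clean(doc)
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after cleaning and lowercasing
        seen.add(digest)
        kept.append(text)
    return kept

raw = [
    "<p>Large language models   learn from text.</p>",
    "Large language models learn from text.",
    "Click here",
    "Tokenization splits text into   subword units.",
]
print(preprocess(raw))
```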

Data is the new oil.

Tokenization

Tokenization splits text into smaller units called tokens, such as words or subwords. Large language models like BERT and GPT process and generate language as sequences of tokens. Training a transformer-based LLM costs about 6 FLOPs per parameter for each token.

During inference, it needs only 1 to 2 FLOPs per parameter per token. Effective tokenization supports tasks like text classification, code generation, and conversational AI. Both natural-language prompts and source code must be tokenized precisely for a model to interpret them and respond accurately.
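Byte-pair encoding (BPE) is one widely used way to build a subword vocabulary; GPT-2, for example, uses a byte-level variant. The toy sketch below learns merge rules on a tiny invented corpus by repeatedly joining the most frequent adjacent pair of symbols. It works on characters rather than bytes and is not the implementation of any particular library.

```python
from collections import Counter

def pair_counts(words):
    """Count adjacent symbol pairs across the corpus, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules, starting from single characters."""
    words = Counter(tuple(w) for w in corpus.split())
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # most frequent pair (first seen wins ties)
        merges.append(best)
        words = merge(words, best)
    return merges, words

merges, vocab = learn_bpe("low low low lower lowest newest newest", num_merges=3)
print(merges)
print(vocab)
```

After three merges, the frequent word "low" has become a single token, while "lower" is still split into "low", "e", and "r". Real tokenizers learn tens of thousands of merges.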

Synthetic data generation

Synthetic data generation creates additional data that mimics real-world information. This process expands training datasets for large language models (LLMs). By generating more text examples, models learn better and perform tasks like text classification and language generation more accurately.
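One simple way to generate labeled synthetic examples is to fill sentence templates with interchangeable words. Many pipelines instead prompt an existing LLM to write examples; this sketch uses fixed templates to stay self-contained. The templates, slot words, and the `generate` helper are all hypothetical.

```python
import itertools
import random

# Hypothetical templates and slot fillers for a sentiment-classification task
TEMPLATES = {
    "positive": ["The {item} was {pos_adj}.", "I really liked the {item}; it felt {pos_adj}."],
    "negative": ["The {item} was {neg_adj}.", "I regret buying the {item}; it felt {neg_adj}."],
}
SLOTS = {
    "item": ["battery", "screen", "keyboard"],
    "pos_adj": ["excellent", "reliable"],
    "neg_adj": ["flimsy", "disappointing"],
}

def generate(label, n, seed=0):
    """Fill every template for `label` with every slot combination, then sample n unique examples."""
    adj_slot = "pos_adj" if label == "positive" else "neg_adj"
    examples = [
        (template.format(item=item, **{adj_slot: adj}), label)
        for template in TEMPLATES[label]
        for item, adj in itertools.product(SLOTS["item"], SLOTS[adj_slot])
    ]
    rng = random.Random(seed)
    return rng.sample(examples, min(n, len(examples)))

dataset = generate("positive", 4) + generate("negative", 4)
for text, label in dataset:
    print(label, "|", text)
```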

Synthetic data can also support techniques like post-training quantization, which reduces the memory models need. Generative pre-trained transformers and other neural networks can themselves produce high-quality synthetic data.
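Post-training quantization can be sketched with symmetric 8-bit weight quantization: each float is divided by a per-tensor scale and rounded to an integer in [-127, 127]. Storing one byte per weight instead of four (float32) cuts memory roughly fourfold. This sketch quantizes weights only, which needs no data; schemes that also quantize activations typically measure their ranges on a small calibration set, which is where synthetic text can help. The weight values are invented, and plain Python lists stand in for packed int8 arrays.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the quantized integers."""
    return [v * scale for v in q]

weights = [0.52, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, scale)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error is at most scale / 2
```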

Synthetic data generation helps give LLMs the diverse information they need to understand and generate human language effectively.

Next, explore the latest innovations in LLM architectures.

Innovations in LLM Architectures

New LLM architectures build on transformers and self-attention to improve language understanding. Mixture-of-experts layers, along with the training technique of reinforcement learning from human feedback, further enhance their performance.

Read on to discover more.

Transformers and self-attention mechanisms

Transformers use self-attention mechanisms to understand the relationships between words in a sentence. Training a transformer costs about six FLOPs per parameter per token.

Inference needs only one to two FLOPs per parameter per token, because generating text requires just a forward pass, while training also needs a backward pass to compute gradients. The same architecture underlies large language models like BERT (Bidirectional Encoder Representations from Transformers).
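These per-token costs turn into back-of-the-envelope compute estimates. The sketch below takes GPT-3's 175 billion parameters and assumes roughly 300 billion training tokens (the figure reported for GPT-3); the function names are illustrative, and the inference estimate uses the upper end (2 FLOPs) of the range.

```python
def training_flops(n_params, n_tokens, flops_per_param_token=6):
    """Approximate training compute: about 6 FLOPs per parameter per training token."""
    return flops_per_param_token * n_params * n_tokens

def inference_flops_per_token(n_params, flops_per_param=2):
    """Approximate inference compute: about 1 to 2 FLOPs per parameter per generated token."""
    return flops_per_param * n_params

# GPT-3 scale: 175 billion parameters, about 300 billion training tokens (assumed)
print(f"{training_flops(175e9, 300e9):.2e}")       # total training FLOPs
print(f"{inference_flops_per_token(175e9):.2e}")  # FLOPs per generated token
```

Multiplying 6 × 175 billion × 300 billion gives about 3.15 × 10^23 FLOPs for training, whereas each generated token costs at most about 3.5 × 10^11 FLOPs.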

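Transformers are neural networks that stack self-attention layers with feedforward layers. Because attention processes every token in a sequence at once rather than one at a time, transformers train efficiently on parallel hardware and handle complex language tasks well, making them powerful tools in natural language processing.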
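Self-attention itself is a short computation: each token's query is compared with every token's key, the scores are divided by the square root of the key dimension and turned into weights with softmax, and the output is the weighted sum of the value vectors. The single-head, unmasked sketch below uses pure Python lists and identity projection matrices for readability; real transformers learn the projection matrices, run many heads in parallel, and (in GPT-style decoders) mask out future tokens.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights

# Three tokens, each a 2-dimensional embedding (toy values)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, identity, identity, identity)
for row in attn:
    print([round(w, 3) for w in row])
```

Every token's output is computed from the same matrix products, which is what lets hardware process all positions in parallel.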

Mixture of experts

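Mixture of experts (MoE) replaces some layers of a large language model, typically the feedforward layers, with several smaller, specialized sub-networks called experts. A learned router sends each token to only a few experts, so only a fraction of the model's parameters are used for any given token. For example, Mistral AI's Mixtral 8x7B routes each token to 2 of its 8 experts, using about 13 billion of its roughly 47 billion parameters per token.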

This setup lowers the computing power needed per token compared with a dense model of the same total size, which speeds up processes like text generation and classification.
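Top-k routing can be sketched for a single token. In this toy version, each "expert" just scales its input and the router is a fixed dot product; both are assumptions for illustration, since real experts are feedforward networks and the router is learned during training. With `top_k=2`, only two of the four experts run, mirroring the 2-of-8 routing used in Mixtral 8x7B.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def moe_layer(token, router_weights, experts, top_k=2):
    """Route one token to its top_k experts and mix their outputs by renormalized gate scores."""
    # Router: one score per expert (dot product of the token with that expert's router vector)
    scores = [sum(t * w for t, w in zip(token, wv)) for wv in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    gates = softmax([scores[i] for i in chosen])  # renormalize over the chosen experts only
    output = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        expert_out = experts[i](token)  # only the chosen experts are evaluated
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen

# Four toy "experts": each scales its input by a different factor
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]
out, chosen = moe_layer([2.0, 1.0], router_weights, experts, top_k=2)
print(chosen, out)
```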

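Because total capacity grows with the number of experts while per-token cost depends only on the few experts chosen, MoE models can hold far more parameters than their inference cost suggests. Google's Switch Transformer and Mixtral use this approach; by contrast, BLOOM and LLaMA are dense models, in which every parameter is used for every token.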

This efficiency makes MoE models attractive for applications such as conversational AI and code generation, where fast, inexpensive responses matter.

Reinforcement learning from human feedback (RLHF)

Reinforcement learning from human feedback (RLHF) fine-tunes a pretrained model so that its outputs better match what people want. Humans compare and rank the model's outputs, a separate reward model is trained to predict those rankings, and the LLM is then optimized with reinforcement learning (commonly proximal policy optimization, PPO) to earn higher rewards.

Large language models like Llama 2 and GPT-3.5 were refined this way. Aligning AI responses with human preferences makes them more helpful and accurate in tasks such as text classification and knowledge base answering.
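The reward-model step of RLHF can be sketched with a pairwise (Bradley-Terry style) loss: for each pair of responses where humans preferred one, the reward model is trained so the preferred response scores higher. This is a deliberate simplification: each response is reduced to two hypothetical hand-made features and the reward model is linear, whereas real reward models are large neural networks that read the full text. The later reinforcement-learning step that optimizes the LLM against this reward is not shown.

```python
import math

def pairwise_loss(reward_chosen, reward_rejected):
    """Preference loss: -log(sigmoid(r_chosen - r_rejected))."""
    return math.log1p(math.exp(-(reward_chosen - reward_rejected)))

def reward(w, features):
    """Linear reward model r(x) = w . x (a stand-in for a neural reward model)."""
    return sum(wi * xi for wi, xi in zip(w, features))

def train_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Gradient descent on the pairwise loss over (chosen, rejected) feature pairs."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            step = lr / (1.0 + math.exp(margin))  # lr * sigmoid(-margin): the gradient magnitude
            w = [wi + step * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w

# Hypothetical features per response: [answers the question, is rude]
pairs = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([0.8, 0.1], [0.7, 0.9]),
]
w = train_reward_model(pairs, dim=2)
print(w)
for chosen, rejected in pairs:
    print(reward(w, chosen) > reward(w, rejected))
```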

Applications of LLMs in Natural Language Processing

LLMs help create articles and generate software code. They also power smart chatbots and tools for translating languages.

Copywriting and content creation

Large language models (LLMs) like GPT-3 and GPT-4 are widely used in copywriting and content creation. These models generate high-quality text for ads, blogs, and social media posts.

Companies use LLMs to produce engaging content quickly, enhancing their marketing efforts.

Generating each token costs only 1 to 2 FLOPs per model parameter, far less than the cost of training, so producing large volumes of text is affordable at scale. Generative pretrained transformers make content creation faster, and with advancements in natural language processing, businesses can maintain consistent and effective communication across various platforms.

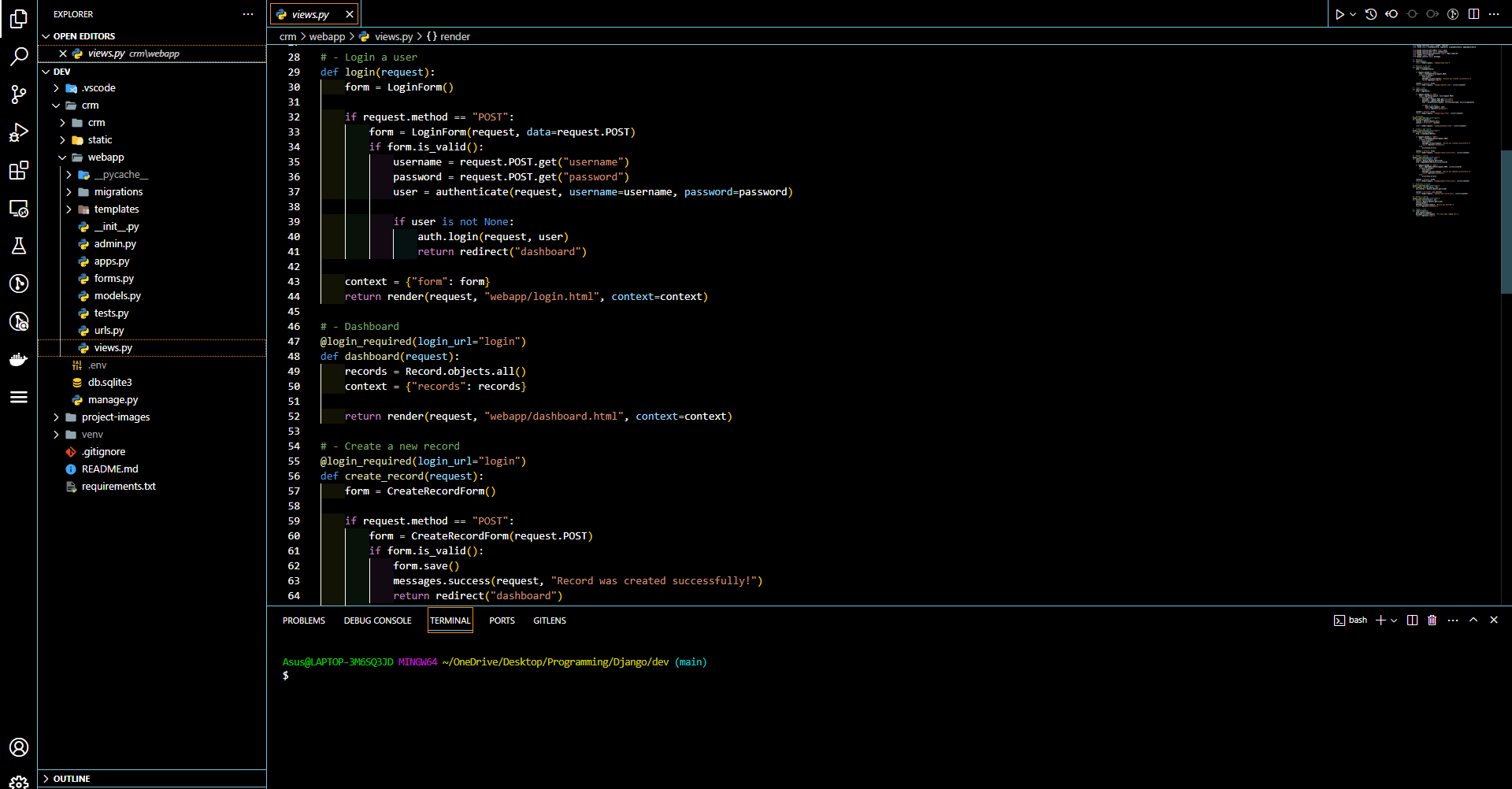

Code generation

LLMs excel in code generation. Models fine-tuned on source code can write programs in languages like Python, Java, and C++.

These models use neural networks trained with self-supervised learning on large amounts of code to understand and write programs. Developers use LLMs to generate scripts, debug software, and automate repetitive tasks. Tools such as GitHub Copilot and OpenAI’s Codex rely on LLMs to help programmers, which can boost productivity.

Conversational AI

Large language models have also transformed conversational AI. ChatGPT, released in 2022, changed how chatbots work, and GPT-4, launched in 2023, brought better accuracy. These AI chatbots understand requests and reply in humanlike language.

They help in customer service, education, and more. Tools like Google Assistant and Bing Chat use LLMs. Users interact with AI through speech and text. Conversational AI uses neural networks to process language.

It answers questions, gives recommendations, and solves problems. Prompt engineering and instruction tuning improve their responses. Companies use LLMs for better communication and support.

Challenges and Limitations of LLMs

Large language models can show biases, which impacts fairness in artificial intelligence. They also create privacy and security concerns in natural language processing.

Algorithmic bias and fairness

LLMs show biases like stereotypes and political favoritism. These biases come from the data used to train AI models. Fairness means making AI treat everyone equally. Developers reduce bias by using better data and testing models carefully.

Ensuring fairness helps improve natural language processing applications for all users.

Security and privacy concerns

Large language models (LLMs) use neural networks to learn from vast amounts of data. They can memorize training data and may accidentally repeat private information. This poses privacy risks if sensitive data is exposed.

For example, an LLM might output parts of confidential documents it was trained on.
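One simple safeguard against this kind of leak is to scan model output for long word sequences copied verbatim from documents known to be sensitive. This is a crude sketch under stated assumptions: the sensitive record is invented, the six-word window is an arbitrary choice, and matching is exact after lowercasing, so paraphrased leaks would slip through.

```python
def ngrams(text, n):
    """All n-word sequences in the text, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def leaked_spans(output, sensitive_docs, n=6):
    """Return n-word sequences in `output` that also appear verbatim in any sensitive document."""
    out_grams = ngrams(output, n)
    hits = set()
    for doc in sensitive_docs:
        hits |= out_grams & ngrams(doc, n)
    return hits

sensitive = ["Patient Jane Roe was admitted on 3 March with acute symptoms"]
safe_output = "The clinic admitted several patients in March."
risky_output = "Records show patient jane roe was admitted on 3 march last year."

print(leaked_spans(safe_output, sensitive))
print(leaked_spans(risky_output, sensitive))
```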

LLMs also pose security threats by enabling the spread of misinformation. They can generate fake news or harmful content quickly. Bad actors might use them to create instructions for dangerous activities.

These risks make security and privacy major concerns in the use of LLMs.

Interpretation and explainability

Security and privacy concerns highlight the need for clear interpretation and explainability in LLMs. Understanding how neural networks make decisions helps identify biases and ensures fairness.

Benchmarks like TruthfulQA and SQuAD evaluate model responses, revealing strengths and weaknesses. Explainable AI techniques aim to trace outputs back to the inputs or training data that influenced them, which builds trust.

Retrieval-augmented generation also helps, because an answer can be traced to the passages the model retrieved. This transparency supports ethical use and improves model reliability in natural language processing tasks.

The Future of LLMs in NLP

Future LLMs will integrate more data types, such as images and audio, making NLP systems more capable. Keep reading to find out how.

Increased capabilities and emergent abilities

Large language models keep improving, and new emergent abilities appear as they grow. In mid-2024, the 70-billion-parameter version of LLaMA 3 ranked among the strongest open LLMs. Models like these can often handle tasks zero-shot, without any examples in the prompt.

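Emergent abilities enhance knowledge discovery and problem-solving. Scaling laws show that loss falls smoothly and predictably as models grow, while some specific abilities seem to appear abruptly once models pass a certain size. Larger language models therefore unlock new capabilities in natural language processing.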

Integration of multimodality

Multimodality enhances LLMs by allowing them to handle text, images, and audio. Flamingo enables visual question answering, and PaLM-E controls robots using multiple data types. These models use artificial neural networks to integrate different inputs effectively.

By processing various forms of information, LLMs achieve more complex tasks. DALL-E creates images from descriptions, and speech-to-text systems convert spoken language into written form.

Foundation models support these capabilities, making software applications and conversational AI more versatile.

Scaling laws and model efficiency

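Scaling laws guide how large language models grow efficiently. The Chinchilla scaling law relates training cost and loss to model size and data:

$$C = 6ND, \qquad L = 1.69 + \frac{406.4}{N^{0.34}} + \frac{410.7}{D^{0.28}}$$

Here, $C$ is the training cost in FLOPs, $N$ is the number of parameters, $D$ is the number of training tokens, and $L$ is the average loss per token. The factor 6 is the six FLOPs per parameter per token needed for training.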

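For a fixed budget C, a larger N forces a smaller D, so the two fractions in L pull in opposite directions. These equations help find the balance of model size and data that minimizes loss.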
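The trade-off can be explored numerically. This sketch plugs the loss formula's constants into Python, estimates training compute as 6 FLOPs per parameter per token, and grid-searches the model size that minimizes predicted loss for a fixed budget. The two configurations compared are approximately those DeepMind reported for its Gopher and Chinchilla models; the grid bounds and step count are arbitrary choices, and the exact optimum depends on which published fit of the constants is used.

```python
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28  # constants from the loss formula above

def loss(n_params, n_tokens):
    """Predicted loss L(N, D) = E + A / N**ALPHA + B / D**BETA."""
    return E + A / n_params ** ALPHA + B / n_tokens ** BETA

def train_compute(n_params, n_tokens):
    """Approximate training cost in FLOPs: about 6 per parameter per token."""
    return 6 * n_params * n_tokens

def best_split(budget, steps=2000, log10_n_min=8.0, log10_n_max=13.0):
    """Grid-search model size N; data size is whatever the budget allows, D = C / (6N)."""
    best = None
    for i in range(steps + 1):
        n = 10 ** (log10_n_min + (log10_n_max - log10_n_min) * i / steps)
        d = budget / (6 * n)
        candidate = (loss(n, d), n, d)
        if best is None or candidate[0] < best[0]:
            best = candidate
    return best

# Roughly Gopher (280B params, 300B tokens) vs. Chinchilla (70B params, 1.4T tokens)
for n, d in [(280e9, 300e9), (70e9, 1.4e12)]:
    print(f"N={n:.1e}  D={d:.1e}  C={train_compute(n, d):.2e}  L={loss(n, d):.3f}")

l_opt, n_opt, d_opt = best_split(train_compute(70e9, 1.4e12))
print(f"best for that budget: N={n_opt:.2e}, D={d_opt:.2e}, L={l_opt:.3f}")
```

With these constants, the smaller model trained on more tokens is predicted to reach a lower loss than the larger model trained on fewer tokens, for a compute budget of the same order.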

Model efficiency improves by following neural scaling laws. Developers of models like Llama 3.1 have used such laws to choose model and dataset sizes that lower loss, and with it perplexity (the exponential of the average loss per token). Efficient models enhance tasks such as document summarization and machine translation.

By optimizing size and data, models achieve better performance in natural language processing.

Impact of LLMs on Industry and Society

Large language models help businesses work faster and smarter using artificial intelligence and neural networks. They also affect society by creating new tools and raising ethical issues.

Workplace transformation

Large language models (LLMs) are changing workplaces fast. A 2023 Goldman Sachs estimate suggested that generative AI could expose the equivalent of 300 million full-time jobs worldwide to automation. Businesses use natural language processing (NLP) to boost productivity.

Jobs in copywriting, customer service, and data analysis are evolving. Generative AI tools, driven by neural networks, help employees work better. Companies adopt technologies like LangChain and speech to text to improve operations.

As LLMs grow, workers need to learn new skills and use advanced tools.

Public sector applications

The public sector uses AI to enhance services. IBM promotes its Granite models as a way to secure data and reduce AI risks, and government agencies can use its Watsonx.ai platform for generative AI applications. These tools use neural networks and self-supervised learning.

Public health departments analyze data with large language models. Educational systems use LLMs for content creation and support. Law enforcement agencies deploy chatbots for citizen interaction.

Analytics from LLMs improve decision-making. GPUs power these AI solutions, ensuring efficient processing. Self-supervised learning helps models understand complex language patterns.

Ethical and governance considerations

Large language models (LLMs) can reflect biases like stereotyping and political favoritism. These biases come from the data used to train their neural networks. Researchers study these patterns to make AI fairer.

Strong governance is needed to monitor and reduce such biases in large language models.

Security risks include using LLMs to spread misinformation or support bioterrorism. Protecting privacy is vital when deploying AI systems. Governance frameworks help prevent these abuses.

Ensuring ethical use of LLMs safeguards society from potential harms.

Applications of LLMs in Conversational AI and Chatbots

LLMs like ChatGPT, released in 2022, drive many chatbots and virtual assistants. They use neural networks to understand and create text. With GPT-4 launched in 2023, these models became more accurate.

Chatbots powered by LLMs handle customer service, answer questions, and assist users instantly. Few-shot and zero-shot prompting allow bots to address new topics without extra training.
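A few-shot prompt is just text: an instruction, a handful of worked examples, and the new input, laid out so that the model's most likely continuation is the answer. The sketch below assembles one for a hypothetical support-message classifier; the labels, the "Customer:/Label:" layout, and `few_shot_prompt` are illustrative assumptions, and the model call itself is omitted. Passing an empty example list gives a zero-shot prompt.

```python
def few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for user_text, answer in examples:
        lines.append(f"Customer: {user_text}")
        lines.append(f"Label: {answer}")
        lines.append("")
    lines.append(f"Customer: {query}")
    lines.append("Label:")  # the model is expected to continue from here
    return "\n".join(lines)

examples = [
    ("My order arrived broken.", "complaint"),
    ("Do you ship to Canada?", "question"),
]
prompt = few_shot_prompt(
    "Classify each customer message as complaint, question, or praise.",
    examples,
    "Thanks, the support team was great!",
)
print(prompt)
```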

Companies like Mistral AI develop new models that push performance further. LLMs enhance user interactions and make support services more efficient, though the challenges and limitations discussed above still apply.

Conclusion

Large language models are transforming natural language processing. They enhance writing, coding, and conversational tools. Issues like bias and privacy need careful handling. These models will shape industries and everyday life.

Using them wisely can bring many benefits to society.

Discover more about how large language models are revolutionizing conversational AI and chatbots.

FAQs

1. What are large language models (LLMs) in artificial intelligence?

Large language models (LLMs) are advanced forms of artificial intelligence that use neural networks to understand and generate human language. Their neural networks are loosely inspired by the brain, but they learn language statistically from vast amounts of text, which makes them powerful tools in natural language processing.

2. How do neural networks and recurrent neural networks enhance LLMs?

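Neural networks learn patterns in language from data. Recurrent neural networks (RNNs), especially those using long short-term memory (LSTM) units, were the leading language models before transformers appeared in 2017; they read text one token at a time and carry information forward in a hidden state. Modern LLMs instead use transformers, whose self-attention lets every token draw on the whole context at once and scales better to large models and datasets.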

3. What is few-shot prompting in the context of LLMs?

Few-shot prompting places a few worked examples in the prompt, and the LLM infers the task from them without any change to its weights. This makes LLMs adaptable, enabling them to handle new language tasks without task-specific training data.

4. How do large language models impact cognitive linguistics and the theory of language?

LLMs are not built from theories of language; they learn statistical patterns from text. Even so, researchers in cognitive linguistics and the theory of language study them closely, debating how far their behavior resembles human language processing and what they suggest about how language can be learned.

5. Can you provide examples from the list of large language models?

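Yes. Well-known language models include GPT-4, PaLM, LLaMA, and BERT, developed by leading AI organizations. GPT-4, PaLM, and LLaMA are large generative models, while BERT is much smaller but remains widely used for classification. Some, such as GPT-4, are multimodal and accept images as well as text. None of them rely on probabilistic context-free grammars, an older statistical approach to parsing.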